Chenru Duan, Yuanqi Du, Haojun Jia, and Heather J. Kulik (2023)

Highlighted by Jan Jensen

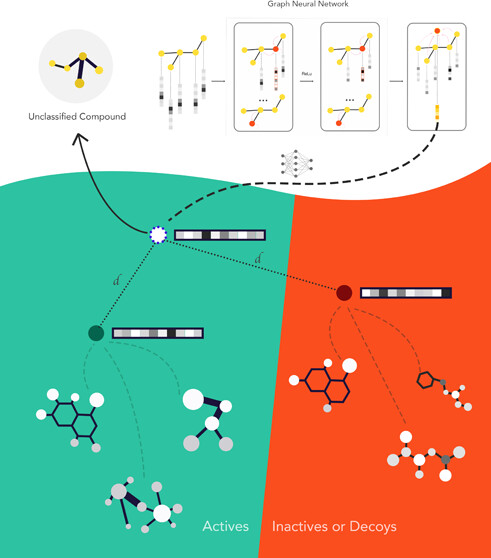

Part of Figure 1 from the paper.

As anyone who has tried it will know, finding TSs is one of the most difficult, fiddly, and frustrating tasks in computational chemistry. While there are several methods aimed at automating the process, they tend to have a mixed success rate or be computationally expensive and, often, both.

This paper looks to be an important first step in the right direction. The method produces a guess at a TS structure based on the coordinates of the reactants and products. Notably, the input structures need not be aligned or atom mapped!

The method achieves a median RMSD of 0.08 Å compared to the true TSs and it often so good that single point energy evaluation gives a reliable barrier. The method also predicts a confidence scoring model for uncertainty quantification, which allows you to a priori judge whether such a single point is sufficient or whether a TS search is warranted. The approach allows for accurate reaction barrier estimation (2.6 kcal/mol) with DFT optimizations needed for only 14% of the most challenging reactions.

So, the method's not going to do away with manual TS searches entirely, but it is going to be invaluable for large scale screening studies. As the authors note, the method can likely also be adapted to the prediction of barrier heights, which could potentially be used to pre-screen reactions on a much, much bigger scale.

The paper is an important proof-of-concept study, but needs to be trained on much larger data sets (note that it is only trained on C, N, and O containing molecules), which are non-trivial to obtain. But the method could likely be used to obtain these data sets in an iterative fashion.

This work is licensed under a Creative Commons Attribution 4.0 International License.